Bayesian Causal Induction

…also known as Causal Discovery.

This talk was first presented at the 2011 NIPS Workshop on Philosophy and Machine Learning. The talk slides are here, and the workshop paper here. Please cite this as: Pedro A. Ortega. Bayesian Causal Induction. 2011 NIPS Workshop on Philosophy and Machine Learning.

If you are looking for a measure-theoretic formalization of causality that can describe induction, then check out causality.

Abstract: In this presentation, I will show that by combining Shafer's idea of representing causal structures using probability trees with Pearl's notion of causal interventions, we can do causal induction in a simple and elegant Bayesian way. Existing methods (e.g. the PC algorithm) do causal induction following a frequentist spirit by running a series of statistical tests that check conditional independencies. Instead, here we pursue a Bayesian approach:

- we place priors over causal hypotheses;

- we state likelihood functions;

- and we infer the posterior over causal hypotheses given the data (both interventional and observational).

This sounds straightforward at first—“it's like placing priors over causal DAGs”. However, this is actually false because causal hypotheses can possess recursive causal dependencies or “hyper-causes” that are much more expressive than causal DAGs.

Motivation

The problem of causal induction, namely the problem of finding out “what causes what”, has puzzled many philosophers such as Aristotle, Kant and especially Hume.

- Does the light turn on whenever I snap my fingers?

- Will the headache go away if I take a pain killer?

- Will I have a bad accident if a black cat crosses my path?

- Will my soul be saved if I join this religion?

More precisely, causal induction is defined as the ability to generalize from particular causal instances to abstract causal laws.

Let’s have a look at another example.

- “I had a bad fall on wet floor.” – that’s my experience.

- “Therefore, it is dangerous to ride a bike on ice.” – that’s my conclusion.

I concluded this because I learned, from my experience, that “a slippery floor can cause a fall”.

There are at least two important aspects to this example. First, we need to infer the causal direction: “Did the fall cause the wet floor or vice versa?” Second, we need to extrapolate this causal knowledge to an unseen situation that shares some similarities. We will not treat the second aspect of the induction problem here because it is essentially the same problem we would encounter in a normal sequence prediction setup.

The important point is that, for us, causal induction is a natural ability that we apply constantly throughout our lives – but how can we formalize it mathematically?

Causal Graphical Model

Let us rephrase the problem we are tackling in the language of causal graphical models.

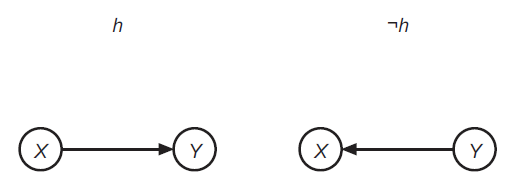

We have two random variables – $X$ and $Y$ – and we are pondering the plausibility of two competing causal hypotheses: either $X$ causes $Y$ or $Y$ causes $X$, which we label as $h$ and $¬h$ respectively. We assume that both hypotheses model identical joint distributions over $X$ and $Y$.

A lot of progress has been made in causal induction using machine learning methods. For example, there are methods based on conditional independence testing, like the PC algorithm, which we cannot apply here because they require at least three random variables. Other methods make additional assumptions, for instance about the nature of the noise. But here we are interested in the general case with no additional assumptions.

The first question that we address is: how do we express the causal induction problem using the language of graphical models?

Since we do not know the direction of the arrow, we have to treat it as a random variable – controlled by the causal hypothesis.

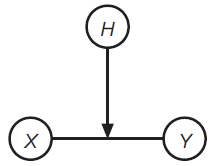

But now we have a problem. The causal hypothesis controls the very structure of the causal graph over the pair $X$ and $Y$, and hence $H$ lives in a meta-level.

This is problematic, because we do not know how to do inference when there are meta-levels using the language of graphical models alone. Furthermore, using an analogous argument, it is easy to build a hierarchy of meta-levels (by placing undirected arrows between variables in the meta-levels).

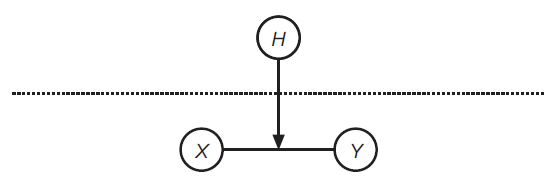

Is there a way to express this situation without resorting to meta-levels?

Important: Before you carry on, please make sure you understand the problem I'm trying to point out. If you are unsure, read the previous paragraph again, then think about it a little, and then carry on reading. I'm saying this because I've noticed that only few seem to get the point (or to care about it). I have so far not found a way to improve my poor explanation.

Probability Trees

Shafer proposed to use the simplest representation of a random experiment – actually the first representation we get taught in a probability course – namely probability trees.

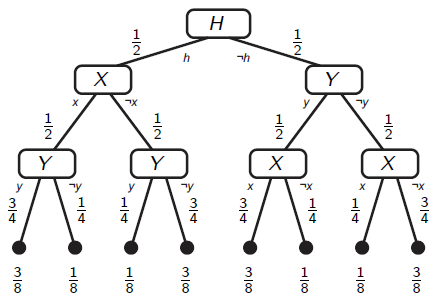

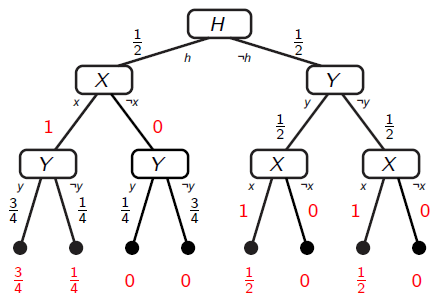

In this case, nodes represent mechanisms that resolve the value of a random variable given the history. For instance, the random variable $Y$, given that $h$ is the correct hypothesis and we have observed $\neg x$, takes the value $y$ with probability $\frac{1}{4}$ and the value $\neg y$ with probability $\frac{3}{4}$.

A path corresponds to a realization of the experiment – it tells us a story of how the random variables acquired their values and in what order.

Consequently, a tree is a representation of all the potential causal realizations of the experiment. We can even represent alternative causal realizations. For instance, the left branch corresponds to the case where $X$ causally precedes $Y$, and the right branch to the case where $Y$ causally precedes $X$.

Of course, a probability tree can also capture the conditional independencies, but it doesn’t do it in such an obvious way as in the case of graphical models.

More importantly, note that all the random variables are first-class citizens now – there are no meta-levels anymore!
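To make the tree concrete, here is a minimal Python sketch that encodes it as nested dictionaries and enumerates every realization. The branch probabilities are the ones used in this talk; the values $P(x|\neg h, \neg y) = \frac{1}{4}$ and $P(\neg x|\neg h, \neg y) = \frac{3}{4}$ are not stated explicitly in the text and are inferred from the assumption that both hypotheses model the same joint distribution over $X$ and $Y$. The representation and the helper name `paths` are illustrative choices, not part of the original work.

```python
from fractions import Fraction as F

# A node maps each outcome label to (branch probability, child node).
# A child of None marks a leaf. "~" denotes negation.
tree = {
    "h": (F(1, 2), {  # left branch: X is resolved first, then Y
        "x":  (F(1, 2), {"y": (F(3, 4), None), "~y": (F(1, 4), None)}),
        "~x": (F(1, 2), {"y": (F(1, 4), None), "~y": (F(3, 4), None)}),
    }),
    "~h": (F(1, 2), {  # right branch: Y is resolved first, then X
        "y":  (F(1, 2), {"x": (F(3, 4), None), "~x": (F(1, 4), None)}),
        "~y": (F(1, 2), {"x": (F(1, 4), None), "~x": (F(3, 4), None)}),
    }),
}

def paths(node, prob=F(1), history=()):
    """Yield (path, probability) for every root-to-leaf path of the tree."""
    if node is None:
        yield history, prob
        return
    for outcome, (p, child) in node.items():
        yield from paths(child, prob * p, history + (outcome,))

leaves = dict(paths(tree))
for path, p in leaves.items():
    print(" -> ".join(path), p)
```

Each printed path is one story of how the variables acquired their values, with the hypothesis treated as just another variable at the root. The order of the labels in a path records the causal order under that hypothesis.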

Inferring the Causal Direction

Let us infer the causal direction.

Assume that we observe that $X$ takes the value $x$, and that $Y$ acquires the value $y$. What is the probability of the causal hypothesis $H = h$?

We use Bayes’ rule, placing a uniform probability distribution over the hypotheses.

The posterior probability of $h$ given $x$ and $y$ is thus the likelihood – factorized according to the causal dependencies of the left branch in the tree – multiplied by the prior probability, normalized by the probability of the data.

Note how the denominator has two different factorizations: one for each of the two sides of the tree.

Replacing the numbers, we obtain…

\begin{align*} P(h|x,y) &= \frac{P(y|h,x)P(x|h)P(h)}{P(y|h,x)P(x|h)P(h) + P(x|\neg h,y)P(y|\neg h)P(\neg h)} \\&= \frac{\frac{3}{4} \cdot \frac{1}{2} \cdot \frac{1}{2}}{\frac{3}{4} \cdot \frac{1}{2} \cdot \frac{1}{2} + \frac{3}{4} \cdot \frac{1}{2} \cdot \frac{1}{2}} = \frac{1}{2} = P(h). \end{align*}

…$\frac{1}{2}$! That is, the prior probability of $h$!

We haven’t learned anything! This makes sense, because the two causal hypotheses differ in the causal order of the random variables, but they have identical likelihoods!
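The same calculation can be checked numerically. The following is a small sketch using exact fractions; the dictionary layout and the function name `posterior` are my own illustrative choices.

```python
from fractions import Fraction as F

# Uniform prior over the two causal hypotheses.
prior = {"h": F(1, 2), "not_h": F(1, 2)}

# Likelihood of observing (x, y), factorized in each hypothesis' causal order.
likelihood = {
    "h":     F(1, 2) * F(3, 4),  # P(x|h) * P(y|h,x)
    "not_h": F(1, 2) * F(3, 4),  # P(y|not h) * P(x|not h,y)
}

def posterior(prior, likelihood):
    """Bayes' rule over a finite set of hypotheses."""
    evidence = sum(prior[k] * likelihood[k] for k in prior)
    return {k: prior[k] * likelihood[k] / evidence for k in prior}

post = posterior(prior, likelihood)
print(post["h"])  # 1/2
```

Because the two likelihoods are equal, the posterior coincides with the prior regardless of which uniform or non-uniform prior we start from.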

Thus, we invoke a fundamental insight of statistical causality: to extract new causal information, we have to supply old causal information, also paraphrased as “no causes in, no causes out” and “to learn what happens if you kick the system, you have to kick the system”.

More specifically, we can introduce causal information by intervening on the experiment. Let us explore this.

Interventions in a Probability Tree

Let us quickly review what we mean when we say that the likelihoods, which I have written under the leaves of the tree, are the same. In the tree, the realization $x$, $y$ under hypothesis $h$ has probability $\frac{3}{8}$ – exactly the same as under hypothesis $\neg h$; the realization $x$, $\neg y$ has probability $\frac{1}{8}$ under hypothesis $h$ – again, as under hypothesis $\neg h$, and so forth.

We intervene on the experiment by setting the value of the random variable $X$ to the value $x$. This means that we are fixing the value of $X$ for all potential realizations of the experiment.

In the tree, this amounts to replacing all the nodes that resolve the value of $X$ with a new node that places all its probability mass on the outcome $X = x$.

Note that as a result of the intervention, the two hypotheses have different likelihoods now. The intervention has introduced a statistical asymmetry!
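The effect of the intervention on the likelihoods can be sketched in a few lines of Python. Here each hypothesis is written as a list of mechanisms in causal order, and the intervention swaps every mechanism that resolves $X$ for a point mass. The names `coin`, `intervene` and `likelihood` are hypothetical helpers introduced only for this illustration, and outcomes are encoded as booleans (`True` for $x$ or $y$, `False` for their negations).

```python
from fractions import Fraction as F

def coin(p_true):
    """Distribution over a binary outcome."""
    return {True: p_true, False: 1 - p_true}

# A mechanism maps the history (values resolved so far) to a distribution.
hypotheses = {
    "h": [  # X is resolved first, then Y
        ("X", lambda hist: coin(F(1, 2))),
        ("Y", lambda hist: coin(F(3, 4) if hist["X"] else F(1, 4))),
    ],
    "not_h": [  # Y is resolved first, then X
        ("Y", lambda hist: coin(F(1, 2))),
        ("X", lambda hist: coin(F(3, 4) if hist["Y"] else F(1, 4))),
    ],
}

def intervene(mechanisms, var, value):
    """Replace every mechanism resolving `var` with a point mass on `value`."""
    point_mass = lambda hist: {value: F(1), (not value): F(0)}
    return [(v, point_mass if v == var else m) for v, m in mechanisms]

def likelihood(mechanisms, realization):
    """Probability of a realization, multiplying along the causal order."""
    prob, hist = F(1), {}
    for var, mech in mechanisms:
        prob *= mech(hist)[realization[var]]
        hist[var] = realization[var]
    return prob

data = {"X": True, "Y": True}
before = {k: likelihood(m, data) for k, m in hypotheses.items()}
after = {k: likelihood(intervene(m, "X", True), data)
         for k, m in hypotheses.items()}
print(before)  # both hypotheses assign 3/8
print(after)   # h assigns 3/4, not_h assigns 1/2
```

Under $h$ the mechanism for $Y$ still sees the value of $X$, so only the factor for $X$ changes. Under $\neg h$ the mechanism for $X$ that used to depend on $Y$ is overwritten, which is where the asymmetry comes from.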

As a side note, I would like to point out that in this tree I could do all sorts of crazy things, like intervening on the very hypothesis!

Update: This is not the most general form of causal intervention. For an even more general definition of causal interventions in a probability tree, check out Measure-Theoretic Causality.

Inferring the Causal Direction – 2nd Attempt

Let us repeat the experiment, but this time setting the value of $X$ to $x$ and observing that $Y$ takes the value $y$. What is the posterior probability of $H = h$?

Again, we write down the posterior probability of $h$ and use Bayes’ rule, and we use Pearl’s hat symbol to denote the fact that we are doing our calculations using an intervened probability tree.

Again, we have the likelihood times the prior, where the likelihood has been factorized according to the causal order. Numerically, all the probabilities stay the same as in the un-intervened case, except the probabilities that have the intervened variable as their argument, which are now equal to one.

\begin{align*} P(h|\hat{x},y) &= \frac{P(y|h,\hat{x}) P(\hat{x}|h) P(h)}{P(y|h,\hat{x}) P(\hat{x}|h)P(h) + P(\hat{x}|\neg h,y)P(y|\neg h)P(\neg h)} \\&= \frac{\frac{3}{4} \cdot 1 \cdot \frac{1}{2}}{\frac{3}{4} \cdot 1 \cdot \frac{1}{2} + 1 \cdot \frac{1}{2} \cdot \frac{1}{2}} = \frac{3}{5} \neq P(h). \end{align*}

As we would expect, the resulting posterior probability has changed now. In fact, since it has increased, we have gained evidence for the causal hypothesis “$X$ causes $Y$”.
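As a quick numerical check, the following sketch recomputes the posterior after setting $X = x$, for both possible observations of $Y$. The function name `posterior_h_after_setting_x` is a hypothetical helper; the case where we observe $\neg y$ is not worked out in the talk and is included only for comparison, using $P(\neg y|h,\hat{x}) = \frac{1}{4}$ and $P(\neg y|\neg h) = \frac{1}{2}$ from the tree.

```python
from fractions import Fraction as F

def posterior_h_after_setting_x(y_observed, prior_h=F(1, 2)):
    """Posterior of h after intervening X = x and observing Y."""
    # Under h: P(x^|h) = 1, and Y still depends on X.
    p_y_h = F(3, 4) if y_observed else F(1, 4)
    lik_h = F(1) * p_y_h
    # Under not h: Y is resolved first with P(y|not h) = 1/2 either way,
    # and the intervened X mechanism contributes P(x^|not h, y) = 1.
    lik_not_h = F(1, 2) * F(1)
    evidence = lik_h * prior_h + lik_not_h * (1 - prior_h)
    return lik_h * prior_h / evidence

print(posterior_h_after_setting_x(True))   # 3/5
print(posterior_h_after_setting_x(False))  # 1/3
```

Observing $y$ after the intervention raises the probability of $h$, while observing $\neg y$ would lower it. Either way the posterior moves away from the prior, unlike in the purely observational case.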

This concludes our explanation of causal induction. We can apply the same technique to deal with more complex cases, for instance to infer causal dependencies in time series.

Conclusions

- Causal induction can be done using purely Bayesian techniques plus a description allowing multiple causal explanations of a random experiment.

- Probability trees provide a simple and clean way to encode causal and probabilistic information.

- The purpose of an intervention is to introduce statistical asymmetries, rendering the likelihoods different.

- The causal information that we can acquire is limited by the interventions we can apply to the system.

- Essentially, in this presentation I have shown that combining Shafer's idea of using probability trees with Pearl's idea of interventions is enough to do causal induction. I am not aware of any other work that has solved this problem using one representation.