Table of Contents

Information-Theoretic Bounded Rationality

Under construction.

Check out our latest summary paper:

Ortega, P.A., Braun, D.A., Dyer, J.S., Kim, K.-E., and Tishby, N.

Information-Theoretic Bounded Rationality

ArXiv:1512.06789, 2015

[PDF]

Bounded rationality is an active field of research in economics with important implications not only to behavioural economics, but also to computational neuroscience and especially artificial intelligence. In artificial intelligence, such a theory would provide a principled basis for the construction of controllers under resource limitations, which is widely regarded as one of the most important open problems of artificial intelligence. Needless to say, there is currently no commonly accepted mathematical theory of bounded rationality.

I have worked on a mathematical theory of bounded rationality that draws inspiration from statistical mechanics and information theory, where the central ingredient is the free energy functional. We have derived the free energy functional from axioms relating utilities with information. Our contribution has been to relate this to bounded rationality, highlight the connections to expected utility theory, and to extend it to decision trees with information constraints.

Classical Decision Theory

Classical decision theory characterizes the behavior of rational decision makers when facing uncertainty. Simply put, a decision maker can be characterized by four ingredients:

- a set of possible actions $\mathcal{A}$;

- a set of possible outcomes or consequences $\mathcal{X}$;

- a subjective utility function $U: \mathcal{X} \rightarrow \mathbb{R}$ assigning a numerical value $U(x)$ to each of the possible outcomes;

- a subjective probability distribution $P$ specifying the conditional probability $P(x|a)$ of an outcome $x \in \mathcal{X}$ given an action $a \in \mathcal{A}$.

The theory then says that a rational decision maker picks the action $a^\ast \in \mathcal{A}$ maximizing the expected utility of the outcome: \begin{align} a^\ast &= \arg\max_{a \in \mathcal{A}} \mathbf{E}[ U|a ] \\ &= \arg\max_{a \in \mathcal{A}}\sum_{x \in \mathcal{X}} P(x|a) U(x). \end{align} If there is more than one action that maximizes the expected utility, say any $a^\ast$ in a subset of actions $\mathcal{A}^\ast \subset \mathcal{A}$, then we can do one any of the following:

- pick any of the optimal actions $a^\ast \in \mathcal{A}^\ast$;

- or pick any probability distribution $P(a^\ast)$ over the maximizing set $\mathcal{A}^\ast$.

Picking an action according to this strategy is known as the maximum expected utility principle. A decision-making problem can be illustrated using a decision tree, like in the following example.

Example

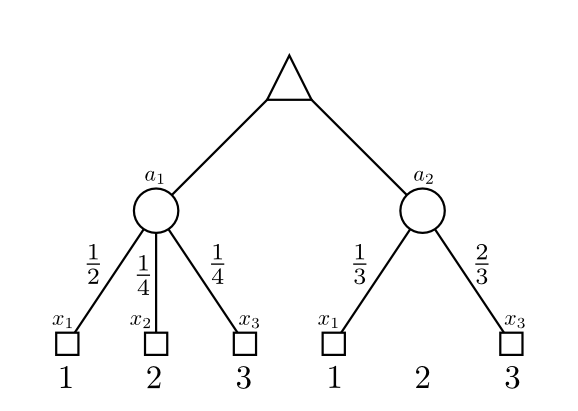

Consider the following decision-making scenario. In this figure, $\triangle$-nodes correspond to decision nodes where the decision-maker has to choose an action, and $\bigcirc$-nodes correspond to chance nodes where Nature randomly chooses an outcome.

Here, the objective consists in deciding whether to choose $a_1$ or $a_2$. The corresponding expected utilities are \begin{align} \mathbf{E}[U|a_1] &= P(x_1|a_1) U(x_1) + P(x_2|a_1) U(x_2) + P(x_3|a_1) U(x_3) \\ &= \frac{1}{2} \cdot 1 + \frac{1}{4} \cdot 2 + \frac{1}{4} \cdot 3 \\ &= \frac{7}{4} \end{align} \begin{align} \mathbf{E}[U|a_2] &= P(x_1|a_2) U(x_2) + P(x_2|a_2) U(x_2) + P(x_3|a_2) U(x_3) \\ &= \frac{1}{3} \cdot 1 + 0 \cdot 2 + \frac{2}{3} \cdot 3 \\ &= \frac{7}{3} \end{align} Since $\mathbf{E}[U|a_1] < \mathbf{E}[U|a_2]$, we choose $a^\ast = a_2$.

Sequential Decision-Making

The previous framework for one-step decisions generalizes easily to sequential decisions, i.e. decision trees with more than just a decision and chance level. Basically, in a sequential decision-making problem we have to choose a policy instead of a single action 1). A policy is a choice of a decision for every decision node in the tree.

Three important special cases in the literature are:

- bandits,

- Markov decision processes (MDPs),

- Partially observable MDPs (POMDPs).

Without going into the details, I just want to point out that the two former types are enormous simplifications because their decision trees are fractals, while POMDPs are roughly equivalent to decision trees where the nodes of the trees correspond to the POMDP's information states. The bottom line is that decision trees are rich enough to represent any of the classical sequential decision problems, and they are all solved using the same principle as in the one-step decision case. Dynamic programming, Bellman optimality equations, Expectimax, etc., while conceptually important, are all just consequences of the maximum expected utility principle.

Bounded Rational Decision Theory

Classical decision theory describes the choice patterns of a rational decision maker under risk. In this scenario, one assumes that the decision maker has a (probabilistic) model of the world, and that he chooses the action maximizing the expected utility of the outcome. For instance, in a roulette wheel, all the odds and payoffs are known, and thus placing an optimal bet simply boils down to the calculation of the expected payoff.

While this theory makes perfect sense when the model of the world is accurate, its application to cases where the model is ambiguous is inappropriate. For instance, when betting on horse racing, the decision maker has only an ambiguous model of the situation, and his degree of confidence depends heavily on his knowledge about the horses, the horsemen, and other conditions such as the track. Because such decision makers don't fully trust their models, they tend to spread their bets in order to protect themselves from a bad outcome. Similar situations arise when the decision maker is facing another agent or when operating a very complex machine. The difficulty of coming up with a reliable model can significantly influence his choice, reflecting either trust (for example, if he thinks that the agent or the machine is cooperating) or distrust (when he thinks that the agent or the machine is adversarial).

Free Energy Model

We have proposed a bounded rational decision theory based on the free energy functional. The ingredients are:

- a set of outcomes $\mathcal{X}$;

- a real valued parameter $\alpha$ indicating the degree of boundedness;

- a prior probability distribution $Q$ over $\mathcal{X}$.

Then, define the free energy functional 2) as \[ F_\alpha[P] = \left\{ \sum_{x \in \mathcal{X}} P(x) U(x) - \frac{1}{\alpha} \sum_{x \in \mathcal{X}} P(x) \log \frac{ P(x) }{ Q(x) } \right\}. \] The free energy functional can be motivated from statistical mechanical and information-theoretic principles. It corresponds to the expected utility (as in classical decision theory), with the important difference that it is regularized. It characterizes the equilibrium distribution, i.e. the probability distribution that extremizes the free energy functional: \[ P^\ast(x) = \frac{ Q(x) e^{\alpha U(x)} }{ \sum_{x' \in \mathcal{X}} Q(x') e^{\alpha U(x')} } \] Because the free energy functional is just a regularized utility, the equilibrium distribution is just a “regularized” optimal action. If we substitute the equilibrium distribution $P$ into the free energy functional, then we obtain the extremum \[ \frac{1}{\alpha} \log Z(\alpha) = \frac{1}{\alpha} \log \Bigl( \sum_{x'} Q(x') \exp\bigl\{ \alpha U(x') \bigr\} \Bigr) \] which plays the role of the value function and the certainty equivalent. Indeed, the free energy extremum is a generalization of the maximum, expectation and minimum operations:

- $\alpha \rightarrow +\infty$: \[ \frac{1}{\alpha} \log Z(\alpha) = \max_{x} U(x), \]

- $\alpha \rightarrow 0$ : \[ \frac{1}{\alpha} \log Z(\alpha) = \mathbf{E}_{Q} \bigl[ U(x) \bigr], \]

- $\alpha \rightarrow -\infty$ : \[ \frac{1}{\alpha} \log Z(\alpha) = \min_{x} U(x). \]

This property of the free energy functional has important applications to decision making, because it gives us the tools to rigorously characterize systematic deviations from expected utility theory in the presence of ambiguity. There are at least two important sources of ambiguity:

- Lack of control. A decision maker can have varying degrees of control over the outcome of a random experiment. For instance, in the game backgammon, the `white' and the `black' players take turns in moving the tokens, and there is also an element of chance (rolling the dice). If the decision maker is, say, the `white' player, then he has only control over the white tokens (positive $\alpha$). However, he has no control over the outcome of rolling the dice ($\alpha = 0$), and he expects the oponent to make adversarial moves (negative $\alpha$).

- Lack of knowledge. A decision maker can also have different degrees of confidence in his model. Three representative scenarios correspond to playing roulette ($\alpha = 0$) and betting on horse races ($\alpha < 0$ if he is not very confident, and $\alpha > 0$ if he's `feeling lucky').

References

Our contributions, in chronological order.

[1] Ortega, P.A. and Braun, D.A.

Information, Utility and Bounded Rationality

The fourth conference on artificial general intelligence, pp. 269-274, 2011.

[PDF]

[2] Ortega, P.A.

PhD Thesis, Dept. of Engineering, University of Cambridge, 2011.

[PDF]

[3] Braun, D.A. and Ortega, P.A. and Theodorou, E. and Schaal, S.

Path Integral Control and Bounded Rationality

2011 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning, Paris.

[PDF]

[4] Ortega, P.A. and Braun, D.A.

Proceedings of the Royal Society A 20120683, 2013.

[PDF] (Email me for this paper.)

[5] Ortega, P.A. and Braun, D.A.

European Workshop on Reinforcement Learning 2012

[PDF]

[6] Ortega, P.A. and Lee, D.D.

An Adversarial Interpretation of Information-Theoretic Bounded Rationality

Twenty-Eighth AAAI Conference on Artificial Intelligence (AAAI '14), 2014

[PDF]